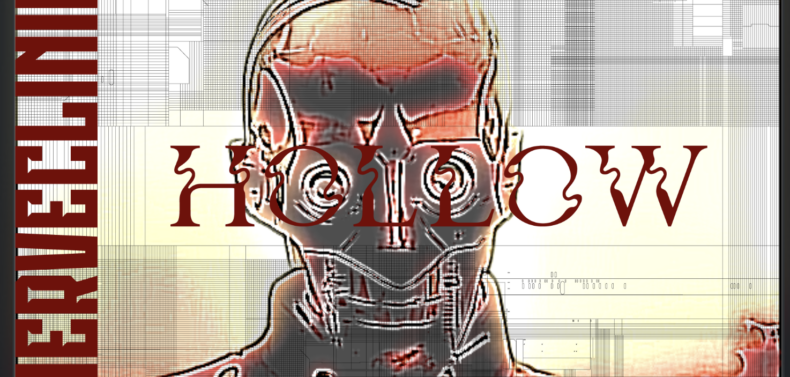

Nerveclinic, the music-producer moniker of multidisciplinary artist Monty Greene, has just released a music video for the song “Hollow” that incorporates AI-generated imagery. The video shows how AI tools can serve as a creative resource, supplying source material that artists then edit and otherwise give a human touch.

A pioneer of industrial music in the mid-’80s, Greene was a member of Damage Report, Athens’ first industrial band, which was notorious for combining dark computer-generated sounds with jarring live performances. Soon after, he launched Nerveclinic, which continues to create original computer-based music alongside experimental videos. Now, as the music curator and host of Sonic Space at the Athens Institute for Contemporary Art, Greene continues to celebrate thought-provoking approaches to music. His own rare live performances use his videos as a backdrop on stage.

Since the early days of the COVID-19 pandemic (an event that prompted his move from Brooklyn back to Athens after a 30-year absence), Greene has focused on creating music videos that feel more like short art films. One such video, “Gray Clouds,” incorporates footage referencing the virus in Wuhan, China, in January 2020, shortly before it became a global pandemic. This body of work can be explored on his YouTube channel.

Below, check out the video for “Hollow,” followed by a Q&A with Greene in which he discusses his creative process and thoughts on AI.

Flagpole: What was the process like for creating this music video? What intrigued you about using AI?

Monty Greene: I use a lot of “found sources” from YouTube and TikTok as material for my music videos, and I would call the style a collage of images. I don’t just use found images in their original form; I put them into a video editing program and add lots of color, effects and overlays to “make them my own.”

I became interested in using AI in my videos (this is my first video with any AI) because I was seeing incredible progress in the field on TikTok. I was also seeing a style of AI imagery that fit my artistic vision (dark electronic music). I found these images very unique, almost a new style, and I was excited to share that with my audience.

So the process for creating this video was finding lots of AI material from five or six sources, cutting it into pieces that change with the beat, and then doing the painstaking work of editing and altering the look.

FP: What are your general current feelings about AI? Where do you think the balance is between AI’s ability to serve as a creative tool versus AI’s ability to negatively impact or replace human artists?

MG: I feel very strongly that AI can be a very bad thing for art, but it can be a very good thing when an artist uses it properly as a tool. The bad part is when a person with no artistic talent starts using AI to create completely new music. The AI has learned to create a song by listening to other musicians’ styles and music and copying, or plagiarizing, them. In my opinion this is wrong, and it may be found illegal under copyright law; there will be many court cases fighting it. Hopefully this will bring in new rules and updated copyright laws. There will be entertainment corporations that use it to replace human creativity to save money… very scary.

On the other hand, if you look at the images in my video, both the original AI images and my edits have a very unique style. I don’t really see these images as derivative in any meaningful, recognizable way. What do they remind you of? I can’t think of anything. I took the original images, which I think were pretty fresh already, and spent many, many hours editing and altering them. This video took several months of work, several nights a week, to finish, mostly sourcing and then editing the AI material. I feel that I have honestly created something new from it, with a unique style similar to the style of my non-AI videos.

FP: When did you start making music as Nerveclinic? Do you find any parallel between using AI today and how you used computers to generate sounds early on with Damage Report back in the ’80s?

MG: Well, Nerveclinic was the name of a band I was in after Damage Report, around 1988–1990. Then I took a break from creating music and started DJing techno/electro, and I kept using Nerveclinic as my DJ name (I’m DJing techno at a private party this weekend). Then, about 15 years ago, I switched back to creating music and once again used the name Nerveclinic.

Let’s clear up part of your question: I don’t use any AI in any of my music, nor will I. All the music is created using synthesizers in a computer. I play all of it on a traditional keyboard that plugs into the computer, and I play the music with my hands like any traditional musician. The computer is basically a recording studio that lets me use four, six, even ten synths in one song.

AI is only in the graphics in the video. I am very much opposed to using AI to create music. I feel strongly that writing songs is a job for real humans, because what the AI is doing is basically plagiarizing music originally written by humans. I am open-minded, though, if someone thinks they can convince me otherwise.

So the way I make music today is very similar to what we were doing with Damage Report as early as 1984, when my bandmate John Whitaker used a computer on stage during live shows at the 40 Watt. Again, he played all the music on a synthesizer running into the computer; the computer wrote nothing on its own. It was always fun to watch new faces stumble into the Watt who had never even seen a computer before, let alone heard music made with one. They were really confused.
